Time is a funny thing. Before you know it, years and even full decades have gone past, almost without you realizing it. It does indeed seem like time is speeding up with every new orbit one does around the sun. Once in a while it is perhaps wise, then, to make a full stop and take a look back at one’s past work and ideas. This can be useful in order to:

reflect on one’s designs and thoughts,

learn from past failures and successes,

find inspiration and get new perspectives or discover forgotten ideas.

Interviews of yours truly from 2008, 2009, and 2010.

While looking at your old portfolios and reading past diaries and sketchbooks is useful, there is nothing quite like interviews to get the condensed version of you and your work from days of yore. In 2008, 2009, and 2010 I was interviewed in three different trade magazines about my work. In chronological order: 3D World - an international magazine on everything 3D graphics, Rum - the Swedish publication on architecture, interiors and design, and Snitt - the official magazine from GRAFILL, the Norwegian association for illustrators.

In the interviews I talked about a variety of topics that were on my mind: lessons learned from co-founding an architecture visualization start-up, the importance of diversity in background and skills in small companies, how technology will change jobs or make them obsolete, the emergence and impact of digital architecture and XR technologies, and a future in which the digital and meat spaces would converge. Let’s take a look at some of the ideas and opinions I had and reflect on how I see things in 2022.

Balancing diversity and creativity in startups

I co-founded Placebo Effects, my second architecture visualization company, in 1999. We were three founders, Bonsak Schieldrop, Dag Meyer and myself, all architects and guys of about the same age. By 2008 we had a broader group of people of varying ages, backgrounds and genders: 3D generalists, an illustrator/copywriter and a graphic designer. We had also expanded outside of architecture into VFX and digital sets. In the 3D World article “How to launch your own studio” I reflected on how I should have realized earlier that complementing creative talent with administrative and sales talent is important if you want to grow a company.

Talent balance is very much on my mind today in my new startup Viewalk. While we are two co-founders with complementary skill sets and backgrounds, we will need to bring in a handful of diverse talent soon to help scale our company and product. In 2008 I also reflected on the challenge of balancing the running of a business with doing creative work. Having been CEO at two of my own earlier companies while producing lots of novel visual design, I find this continues to be a challenge. These days I am the happiest when I can build a virtual space or game level in the Unreal Engine, but I still have to take my share of customer acquisition, networking, fundraising, HR and paying bills. In a startup you have to be comfortable wearing lots of hats every day for at least a couple of years.

My advice to myself in 2022 is to create something visual in 3D every week to ensure my creative side is fulfilled.

The digital set I designed, modeled and rendered for the TV2 Sport Arena commercial in 2008.

The creative brain versus the creative AI

The 2009 Snitt article headline was “The brain becomes most important”, which was my answer to the journalist’s question of which tool would become my most important one in the future. I speculated about a forthcoming human-machine interface allowing us to simply think something for the machine to materialize on the screen. It is both frightening and exciting at the same time to see how quickly AI tools have developed during just a couple of years. In 2019 we were exploring deep learning and neural style transfer to apply the look of Edvard Munch’s paintings to photos of his garden in Åsgårdstrand. It was time-consuming and required Python programming skills.

Neural Style Transfer using one of Edvard Munch’s paintings on a 360 photograph from the garden of his summer house in Åsgårdstrand, Norway.

In 2022 using AI “art” engines like Midjourney, DALL-E 2 or Nightcafe one can — within seconds — essentially have the machine do what I speculated 14 years ago.

A series of images produced by Midjourney to the left and DALL-E 2 to the right in response to the text prompt “A futuristic white and pale blue room with glass floor, curved walls and white strips of neon with a dancing blonde fashion model in a skin tight coctail dress, inspired by Star Wars and TRON.”

While we can’t yet actually simply think of something to have it be created, we can very quickly type a text prompt like the one above and in less than a minute, like magic, have numerous images materialize on screen depicting, to a certain degree, what was described. The image above was an attempt by me to have these “art-machines” create a concept image for a virtual space I designed in 2009 for the music video “I Can’t Get Over”. While one can find many beautiful architectural images created by these AI-engines online, I am struggling to make them do something closer to my design aesthetics. This, I am sure, has to do both with my inexperience in ‘prompt craft’, which is destined to become a new type of job, and with the current limitations of the AI technology. After all, the machines are heavily biased since they are creating images from what they have “learned” trawling all the images on the internet.

The Star Wars inspired dressing room I designed for September’s “I Can’t Get Over” music video in 2009.

The increasing democratization of content creation

In 2009 I stated that the hotshots of the future would be the creatives that mastered new technology. The lesson I take from my prediction and the current state of AI, and in particular the speed of its development, is to keep experimenting with AI and figure out ways to include it in my own production pipeline. These engines are part of democratizing the visual arts, just like numerous cloud, web and app technologies have democratized publishing of text and video, allowing millions of people to express themselves on the Internet.

While the AI tech comes with many challenges, including questions of what art is, who the creator is, and how material used to train the machines can be sourced ethically, it also brings many new possibilities. At Viewalk we have started looking at AI “art” engines as a tool for ideation and making WIP concept art for our MVP. Below are examples of images depicting various types of achievements, created by my co-founder Bengt Ove using Midjourney.

A series of concept art images for the prototype achievement system in Viewalk, generated by Midjourney in minutes instead of the several hours (if not days) a concept artist would need.

YouTube and the more recent SoMe platforms like Insta, Snap and TikTok have enabled anyone to create and publish photos and videos for the whole world to see. Now anyone can basically create “visual art”. The next step for AI “art” engines will be to create fully realized 3D scenes, and then subsequently animations and eventually fully interactive worlds and content. It will take some time since even a simple 3D object is far harder to create than a 2D image. With a 2D to 3D approach, the machine will have to rely on familiarity to build the parts of an object that cannot be seen in a single image.

We are seeing the start of this in the many avatar tools that create full 3D heads of people from a single photograph, which is possible since the engines have been trained on millions of photos of people to “understand” what a 3D head should look like even if they cannot see all of the details in the 2D image. This feels like magic and reminds me of the seminal scene in Blade Runner in which Deckard uses the Esper machine to analyze a photo to find a hidden clue reflected in the mirror.

A non-existing person generated by AI on the left and the AI-generated 3D avatar of the same “person” by ReadyPlayerMe.

The photo in Blade Runner that was searched for hidden clues in the scene that were unseen from the vantage point of the photograph.

Startups like Norwegian Sloyd are creating tools that, while not AI, allow you to automatically create procedural 3D models on the fly. In the not so distant future it will become increasingly easy for anyone to create interactive content and publish it for millions of people to engage with. This will be similar to the many mini-games found on platforms like Rec Room and Roblox, with an even lower entrance barrier than these platforms provide today.

I believe that the future for Viewalk will very much be about this - user generated interactive content - allowing anyone that wants to participate in content creation to create, play and hang out in worlds of their own making with friends and strangers.

Our headset augmented future is still years away

When I started looking into Augmented Reality in 2009, I was inspired by research done at The Oslo School of Architecture and Design. This was the first time since I started my career in a VR-startup in Houston in the mid-90s that I revisited XR-technologies. In the Snitt-article I speculated about a future in which the virtual and physical spaces melded together into one, with layers of data invisible to the naked eye overlaid onto the real world with MR-glasses, similar to the fictional world in the 2006 novel “Rainbows End” by Vernor Vinge.

The VR headset I developed content for in Houston in 1994 and photo from one of the events we did when I was working at the startup CyberSim.

I said that the most important task in the future, related to visual design and communication, would be visualizing information. Given the vast and increasing amount of data created in the world, I think this very much still holds true. It will likely be AI engines, rather than information designers that will decipher noise into comprehensible information to help humans make the right decisions.

The technologies of modern smartphones, cheap sensors and small high resolution displays, were in large part enablers for Oculus and others from 2012 to bring XR-technologies to ‘ordinary’ people outside of the research, defense and medical industries. While the combination of visualizing information and Mixed Reality glasses is an extremely powerful one, we are nowhere near a reality in which cheap and good MR-glasses are available to consumers. For these technologies to become mainstream, the devices need to become much cheaper and smaller, with attractive designs (think cool sunglasses), and solve challenges that are barriers to all day use, like the vergence-accommodation mismatch.

While the Magic Leap 2 glasses are the current state of the art in mixed reality, they are neither cheap nor something most people would want to be seen with on their heads or wear for hours every day.

Snap’s Spectacles are closer to a form factor appealing to consumers, but both the current and next iteration of the glasses are for developers only.

Architects and architecture in virtual spaces

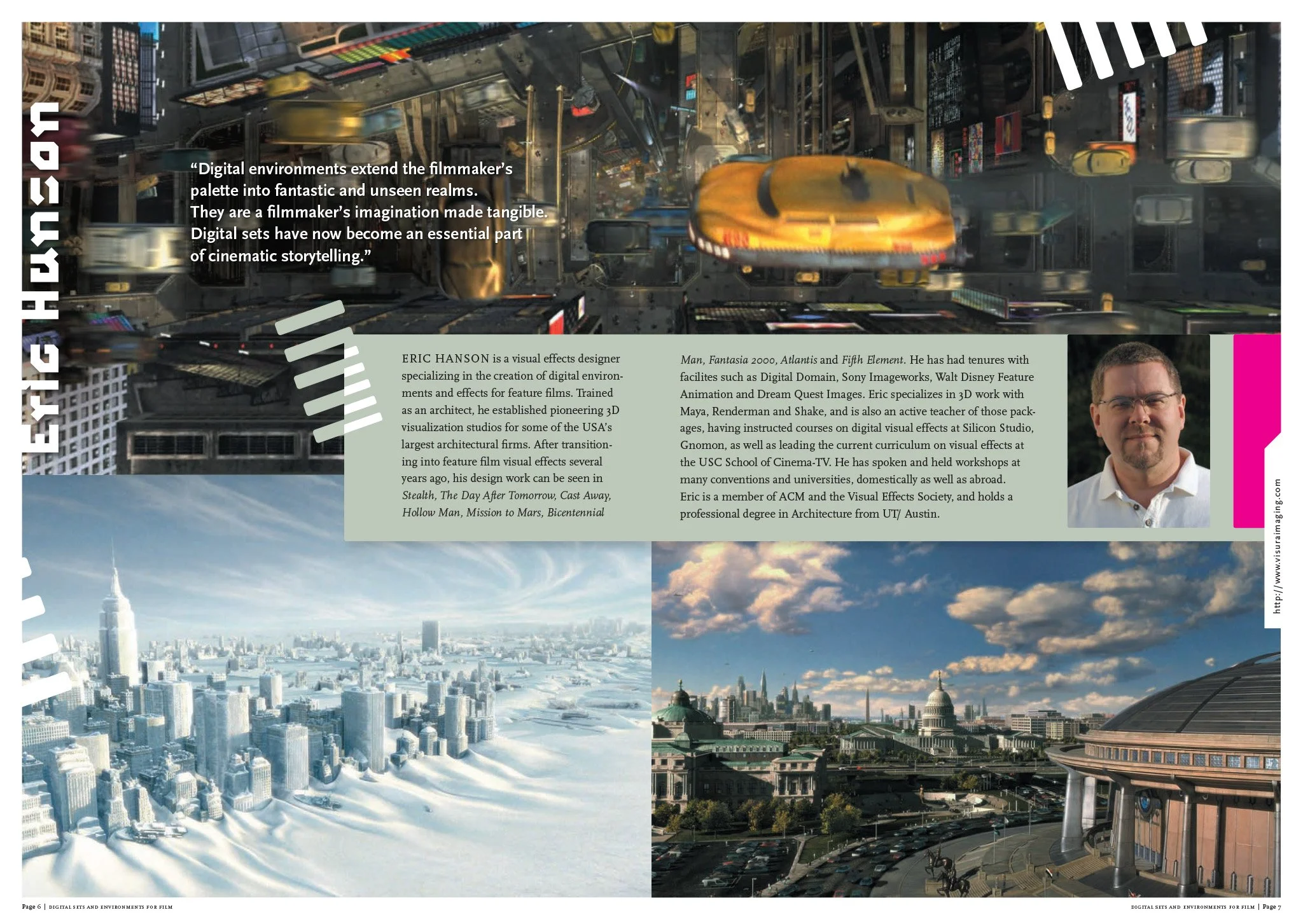

In other blog posts I have argued why it makes sense for architects to design virtual spaces, so I want to discuss this topic here. When I was interviewed for the Swedish magazine RUM in 2010, fresh on my mind was a seminar on digital architecture where DICE talked about the architecture of the seminal 2008 computer game Mirror’s Edge. That and the two Best Visual Effects Awards my company had taken home for the aforementioned music video for September and the commercial for TV2 Sport. I was hopeful that architects could and would be the ones driving the evolution of virtual spaces and architecture. This was no doubt impacted by the growing importance of digital sets in film, seen in movies like The Fifth Element and The Day After Tomorrow, with groundbreaking work by architects turned VFX-designers like Eric Hanson.

I stated that “In conjunction with film- and game projects becoming more and more advanced and requiring more and more specialized competence, I think that more and more architects will work with digital architecture just as well as with constructions of steel and stone.”

Program spread from the Digital Storytelling 2006 conference I organized with a collage of work and images from Eric Hanson from films like The Fifth Element, The Day After Tomorrow and Bicentennial Man.

With its clean, abstract, futuristic style, the virtual architecture of Mirror’s Edge by DICE continues to be a great inspiration for both me and my co-founder Bengt Ove Sannes at Viewalk.

While virtual or digital architecture has indeed become even more commonplace than 12 years ago, with big movies like TRON: Legacy and Oblivion, both directed by architect turned director Joseph Kosinski, and in more recent years the massive influx of virtual production and lately web3 virtual worlds, it is VFX, game and 3D artists who have been designing most of these spaces, not architects.

There are a few notable exceptions, like Samuel Arsenault-Brassard, who created the groundbreaking virtual gallery Museum of Other Realities, aka MOR. It is one of very few virtual architectural spaces that in my opinion is a work of art in and of itself. One will get the most powerful experience of MOR if visiting in a VR headset. Then there is architect turned XR-chitect Alex Coulombe, who is experienced in architecture and theater design and connects performers with audiences across the “Metaverse” by streaming high resolution worlds to 2D and VR-devices. Andreea Ion Cojocaru of Numena is a licensed architect and software developer who explores the intersection of traditional architecture and VR/AR. Her theoretical and philosophical work is at the forefront in discussing how we can advance virtual spaces beyond replicas of gaming worlds and physical reality.

Museum of Other Realities designed by architect Samuel Arsenault-Brassard.

There is also the British Metaxu Studio headed up by architect Pierre-François Gerard. Exploring web based virtual architecture on the Hubs platform, they have created a number of unique and evocative architectural spaces that can be visited both in a web browser and a VR headset. Some of their early works included a series of gallery exhibits, in one of which I was invited to exhibit one of my virtual architectural spaces, “The Machine Stops”.

Capture of the Hubs based virtual art gallery by Metaxu Studio where I exhibited a model and images of my design for the interactive VRchat theatre experience “The Machine Stops”.

When it comes to more traditional architects, the examples of work are few and far between. Architect Shoshana Kahan and her studio Unbuilt XYZ are creating a platform for digital architecture. Supporting a number of ‘Metaverse’ platforms, they are partnering with IRL architects and monetizing conceptual architecture using NFT technology. There is Bjarke Ingels Group’s virtual headquarters for Vice Media, which made the news this spring. It was newsworthy not for its cool design, but for its inability to take advantage of the new possibilities of virtual space. Zaha Hadid Architects announced a virtual city project earlier this year which, like BIG’s project, seems solidly rooted in traditional architectural design.

Show me the money

Why haven’t more architects flocked to creating virtual architectural spaces? I think it is a combination of a number of factors.

Money: the use case has yet to emerge and so there is not an abundance of paying gigs to drive innovation unless one is working in a venture-funded Web3 startup or at a large, classic game studio. This is reflected in how most of the architects I know, unlike their “meat space” counterparts, do not have teams of other architects working full-time designing virtual spaces.

Skills: while most younger architects today are well-versed in 3D architectural tools like SketchUp and Revit and entertainment tools like Maya or 3D Studio Max, one needs an understanding of game technologies and engines to create virtual architecture. It is simply not enough to design a virtual construction; most often one also needs to help build it and light it for real-time use.

Prestige: there are essentially no virtual architectural spaces of world fame yet, and I think most architects find it more prestigious to create constructions of stone, wood and steel; monuments that will leave a legacy.

We are, however, starting to see these skills being taught in architecture schools, like the Harvard course by Eric de Broche des Combes of Luxigon fame, Immersive Landscape: Representation through Gaming Technology. He recently started, with his long time friend Joshua Ramus from REX, an experimental research group, Holly13, dedicated to virtual and digital architecture. Architect and doctoral researcher Kai Reaver has been teaching students in creative technologies with XR at universities like Leopold-Franzens Universität Innsbruck, HEAD Haute École d'Art et de Design in Geneva, and the Oslo School of Architecture and Design.

We are also seeing virtual architecture on the radar of architecture competitions. In 2019 Bee Breeders (now Buildner Architecture Competitions) arranged a competition for a virtual architecture museum, which I entered, receiving an honorable mention for my project, Elevate. This year saw a new architecture competition by the same company, titled Virtual Home.

My honorable mention competition entry Elevate from 2019.

Magic on your phone

In 2009 I had already started exploring novel ways of creating interactive architectural and entertainment experiences using 360 images, Flash and HTML. The concept, which I named ‘Universal Panoramas: narrative, interactive panoramic universes on the Internet’, was presented at the SIGGRAPH computer graphics conference in New Orleans. In Placebo Effects, my company at the time, it would take a couple more years before we got any commercial traction for interactive content.

In 2011, the year following my interview in Snitt, something truly magic happened. There was a new “kid” on the block. A powerful new mobile AR SDK called String came to market and we discovered the game engine Unity. The first mobile AR prototype we created was an attempt at visualizing architecture at 1:1 scale. It required the printing and hanging of a huge fiducial marker image on the facade of the building in order to replace the facade with a new virtual building.

MARAD - Mobile AR Architectural Design - was a mobile AR prototype created at Placebo Effects in 2011 using Unity and the String AR SDK.

This work led us to create a novel interactive mobile AR marketing experience for Tine, the largest dairy producer in Norway, in collaboration with ad agency Try. Its success was in no way guaranteed since it required three things to work: a compatible smartphone, a milk carton and the download of an app. Nevertheless it became hugely popular, hitting #1 on the Norwegian app store and being downloaded more than 110 000 times, a feat in a country of then only 5 million people. Developed at the end of 2011 and launched early 2012, it was shortlisted for Cannes Lions in two categories and presented at SIGGRAPH and the XR conference ISMAR in 2012, and at SIGGRAPH Asia in Hong Kong in 2013.

Today mobile AR has come much, much further. We are starting to see solutions to challenges that were apparent in our demo, like occlusion of live objects with respect to the augmented content, and we no longer need large known image markers to track the world with a smartphone thanks to technologies like ARKit and ARCore.

The interactive narrative TineMelk AR app we created in 2012 (published in early 2013).

Immersive mobile experiences

I started writing this post to reflect on old designs and thoughts, to see if I could learn from my past failures and successes, find inspiration and get new perspectives and maybe discover old insights I had forgotten. I did this all in the name of helping me set a new course for my own and my company’s future. The thing is, I already jump-started a new course last year when I co-founded Viewalk, after being blown away by a surprisingly immersive and engaging prototype on a smartphone developed by my co-founder in the summer of 2020. I truly had not expected that a “handheld VR” experience could create such a level of immersion and engagement.

Minds in motion

There is something magic about walking on your own feet in a virtual world. It is the most natural thing to do, yet for immersive headset based VR it is almost impossible to experience unless you are at a location-based VR center with Quest headsets or a backpack PC and HMD strapped to your body. For all the goodness of VR, most experiences today are largely motionless apart from turning your body and waving your hands. Yes, you can teleport or virtually move your body with a joystick, but it is not the same as physically moving your body through space.

Humans are experts at walking on two feet, and some scholars like Shane O’Mara, author of “In Praise of Walking”, think it is as much of a defining factor in the evolution of mankind as language. Once we have learned to walk it requires minimal mental effort, and all the input needed to keep walking comes from the movement and body itself, something we can clearly see in people with vision impairments still being able to walk perfectly. The selective memories of our wanderings are central components of our experiences and ability to make maps of the world we have experienced.

Gaming is good for you, sitting is not

Besides being plain fun, “research has shown that video games can help people see better, learn more quickly, develop greater mental focus, become more spatially aware, estimate more accurately, and multitask more effectively. Some video games can even make young people more empathetic, helpful and sharing”.*

As a lifetime gamer, 3D designer and computer geek I already have too many hours of sitting in a chair in front of a monitor or on a couch near a TV. As much as I love computer graphics and gaming, I don’t much enjoy the sedentary lifestyle it brings with it. While there is nothing quite like experiencing physical gaming in VR with genre defining titles like Half-Life: Alyx and Beat Saber, the challenge for me and many people with headset based VR is discomfort. This stems from the ergonomic aspects of the headsets, the eye strain caused by the vergence-accommodation conflict, and less than optimal optics. Discomfort and less than ideal UX have been major blockers to a wider adoption of VR since the birth of the technology.

With Viewalk you walk comfortably on your own two feet to move your virtual character in real-time using a device you already have, a smartphone. Combined with spatial audio, the sense of immersion provided by moving your own body, ducking and weaving and sometimes even sprinting, is both liberating and powerful.

The Viewalk platform consists of a series of mini-games set in fully realized virtual worlds that you explore by walking on your own two feet wherever you are.

The power of user generated content

As an architect there is something very fulfilling about creating a space, a world made from one’s imagination and big toy box of building blocks. It reminds me of being a kid playing with crates full of LEGO. While it certainly helps having professional design training, one does not need to be a pro designer to create virtual worlds, or even games. If we look to some of the most popular games and Metaverse-like platforms on the market today like Roblox, Minecraft and Rec Room - it becomes abundantly clear to me that the future of interactive content will be in user generated worlds and games.

Fortnite, the juggernaut game that has brought so much fortune to Epic Games, accelerating the development of the unique technologies seen in Unreal Engine 5, has had a creative mode since 2018. It allows anybody to create their own versions of the game or modify worlds that others have created. The long awaited 2.0 version is due late this year and it is speculated to be the next revolution in gaming, and where Epic Games goes many will follow.

“Tropical Construction” (official name to be determined) - the game level I am currently designing as a lobby for people to hang out and learn to play paintball in Viewalk.

Predicting the future

To recap, I started this post by revisiting interviews with and predictions by my younger self from 13-14 years ago, and I even went all the way back to the mid 90’s when I first started working professionally with XR-technologies.

Predicting the future is impossibly hard. “Most people overestimate what they can achieve in a year and underestimate what they can achieve in ten years” is a quote often attributed to Bill Gates. A similar idea is attributed to the Stanford computer scientist Roy Amara, who some time in the 1960s told colleagues that “we overestimate the impact of technology in the short-term and underestimate the effect in the long run.”

Why is this so? Humans have difficulties getting a grasp on exponential growth since we underestimate what happens when a value keeps doubling. This is exactly what is happening with the development of technology. While Moore’s Law might be outdated, the speed of technological innovation has been steadily increasing since humans first started shaping tools and creating art, and it is not slowing down.
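To make the doubling intuition concrete, here is a minimal sketch comparing a value that grows by a fixed step each year with one that doubles each year. The starting value, the yearly step and the function names are hypothetical and chosen only for illustration; they are not measurements of any real technology.

```python
def linear_growth(start: int, step: int, years: int) -> int:
    """Value after `years` when a fixed `step` is added every year."""
    return start + step * years


def doubling_growth(start: int, years: int) -> int:
    """Value after `years` when the value doubles every year."""
    return start * 2 ** years


if __name__ == "__main__":
    # Hypothetical numbers: both series start at 1; the linear one adds 1 per year.
    for years in (1, 5, 10):
        print(years, linear_growth(1, 1, years), doubling_growth(1, years))
```

After one year the two series are identical (2 and 2), which is roughly where intuition stops. After ten years the linear series has reached 11 while the doubling series has reached 1024.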

With the recent first year of our startup fresh in mind I can attest to myself overestimating what we would achieve in a year in terms of finding our product market fit - we are not quite where we want to be yet. I can also say that more than a decade ago I underestimated how far AI technologies would get in 10 years.

What’s next?

While I can’t make a claim of being able to predict the future, I can see some trends, and make some assumptions based on my insights and what happened versus what I thought would happen all those years ago.

Spatial computing is the future of human computer interaction: I have no doubt about that, but it is going to take a lot longer than most people hope and think for it to become commonplace.

Mobile first: it took 50-60 years for the mobile phone to become ubiquitous from the first commercial product in the 40s. I think it will take decades for something new to replace the smartphone while being equally cheap and easy to use.

Software-only approaches for the win: Innovations in software will move far faster than hardware (AI is one example, although it is dependent on innovations in hardware too). Making your business dependent on early adopters of new hardware (traditional XR) will slow you down.

The creative fields are ripe for disruption: Digitization will touch jobs we previously thought not to be that affected. Think architecture, VFX, visual art, game development and also music. Learning to use AI and other tools to automate and speed up creative work will be key if you want to stay in the game.

Democratization of 3D and interactive on the rise: when I started my career, creating beautiful digital images and 3D models of architects’ 2D drawings was hard and time consuming. Coding something interactive was even harder. Game engines like the Unreal Engine are becoming increasingly easy to use. User generated 3D and interactive content will grow exponentially as tools become easier to use and gaming and gamification more prevalent.

In summary, what’s next? Things will speed up and build on what we have now and the faster the new digital tools are taken up for creative work, the faster we will see what we are looking for in Interactive Content.

Midjourney’s attempt at predicting the future.

* BBC article by Nic Fleming, 26th August 2013

Links to some of the companies, architects and artists mentioned in my post:

Sloyd - the automated 3D modelshop > https://sloyd.ai/

Eric Hanson’s Blueplanet VR > https://blueplanetvr.com/

Andreea Ion Cojocaru’s Numena > https://www.numena.de/

Samuel Arsenault-Brassard and the architecture of MOR > https://www.samuelab.com/portfolio-xr-architecture/mor

Alex Coulombe’s Agile Lens and Heavenue > https://www.heavenue.io/ and https://www.agilelens.com/

Metaxu Studio > https://metaxu.studio/

Eric de Broche des Combes’ Harvard course > http://www.ericdebroche.com/havard-gsd

Kevin Mack’s VR art > http://www.kevinmackart.com/

Virtual Home architecture competition > https://architecturecompetitions.com/virtualhome/

Zaha Hadid Architects’ virtual city > https://www.architecturaldigest.com/story/zaha-hadid-architects-building-virtual-city-metaverse

Viewalk > https://viewalk.com/